November 17, 2025

The Fifth Industrial Revolution represents a significant shift from the Fourth Industrial Revolution’s emphasis on automation and cyber-physical systems. While the Fourth connected machines, the Fifth centers on human-AI collaboration, blending human creativity with artificial intelligence to solve complex challenges. This partnership promises unprecedented innovation, but it also creates new vulnerabilities: the same AI tools designed to augment human capabilities are being systematically exploited for criminal gain.

- Large language models such as ChatGPT can improve workplace productivity while also powering sophisticated phishing campaigns.

- Image-to-video technology is transforming content creation by easily turning static images into engaging videos, but it also enables identity fraud.

- Voice cloning has evolved from a home assistant novelty into a tool capable of defeating voice authentication and defrauding organizations on a massive scale.

These tools are not only powerful but also readily available; all you need is an internet connection. Anyone can access ChatGPT and similar language models with a free account and no technical expertise. Image-to-video platforms offer free trials and straightforward interfaces. Voice cloning services cost as little as $5 to $10 per month on legitimate platforms, while criminal forums offer specialized attack tools for even less. As a result, sophisticated identity fraud no longer requires deep pockets or specialized skills, just a willingness to exploit widely accessible technology.

As Salesforce founder Marc Benioff said, “I see a crisis of trust in technology… In the Fifth Industrial Revolution, we’re going to have to have solutions that restore that trust.”

Innovation Hijacked

The pace of AI advancement is remarkable. In just the first week of August 2025, the industry saw 12 breakthroughs, from Google’s Gemini 2.5 Deep Think to Meta’s Superintelligence Labs, culminating in Apple CEO Tim Cook declaring AI “as big or bigger than the internet.”

Yet this same innovation is being systematically hijacked. Bad actors weaponize each breakthrough faster than organizations can adapt their defenses. OpenAI CEO Sam Altman’s warnings should give every cybersecurity team pause: he speaks of an “impending fraud crisis” and admits “no one knows what happens next.” His frank assessment captures the central challenge: criminal innovation now evolves at exponential speed.

Addressing banking regulators, Altman called financial institutions that still rely on voice authentication “crazy.” Research from the University of Waterloo supports his concern: a practical attack methodology bypassed security-critical voice authentication systems with success rates of up to 99%. With millions of banking customers depending on voice authentication for account access, a success rate this close to perfect points to a systemic security failure.

Uneven Playing Field

AI has worsened the cybersecurity skills gap, creating an uneven playing field. While cyber defenders need years to build expertise, AI has rapidly democratized the threat landscape, enabling less-skilled adversaries to launch sophisticated attacks with commonplace models. Human fallibility compounds this asymmetry in identity security: in one study, only 0.1% of participants correctly identified all of the real and synthetic media they were shown, yet 57% of people believe they can spot deepfakes. This overconfidence leaves identity verification especially exposed, as attackers can effortlessly impersonate voices and clone faces with off-the-shelf tools while defenders still rely on outdated, static verification methods.

The criminal group Scattered Spider exemplifies how modern cybercrime exploits human fallibility. The group targets helpdesks and account recovery systems with a range of techniques, including:

- Phishing to steal initial credentials.

- “Push bombing” to overwhelm targets with multi-factor authentication alerts until they approve one.

- SIM-swapping attacks to hijack phone numbers.

The solution requires rethinking how we verify identity. When bad actors can easily bypass traditional authentication, we must move to something they cannot democratize: genuine human presence.

The Core Problem: Why Traditional Security Is Failing

As criminals increasingly target our identities, the very foundation of our digital lives, the core problem becomes clear: shared secrets can always be shared. Any system that trusts what someone knows, possesses, or says is inherently vulnerable to AI-enhanced social engineering.

Traditional authentication methods face systematic weaknesses:

- Passwords can be stolen, guessed, or phished

- Documents can be forged or compromised

- Knowledge-based verification can be researched or socially engineered

- Voice authentication can now be defeated with AI-cloned voices

The fundamental flaw is that these methods rely on information that can be replicated, stolen, or synthesized. As AI democratizes sophisticated attack capabilities, this vulnerability gap widens daily.

The Solution: Genuine Human Presence

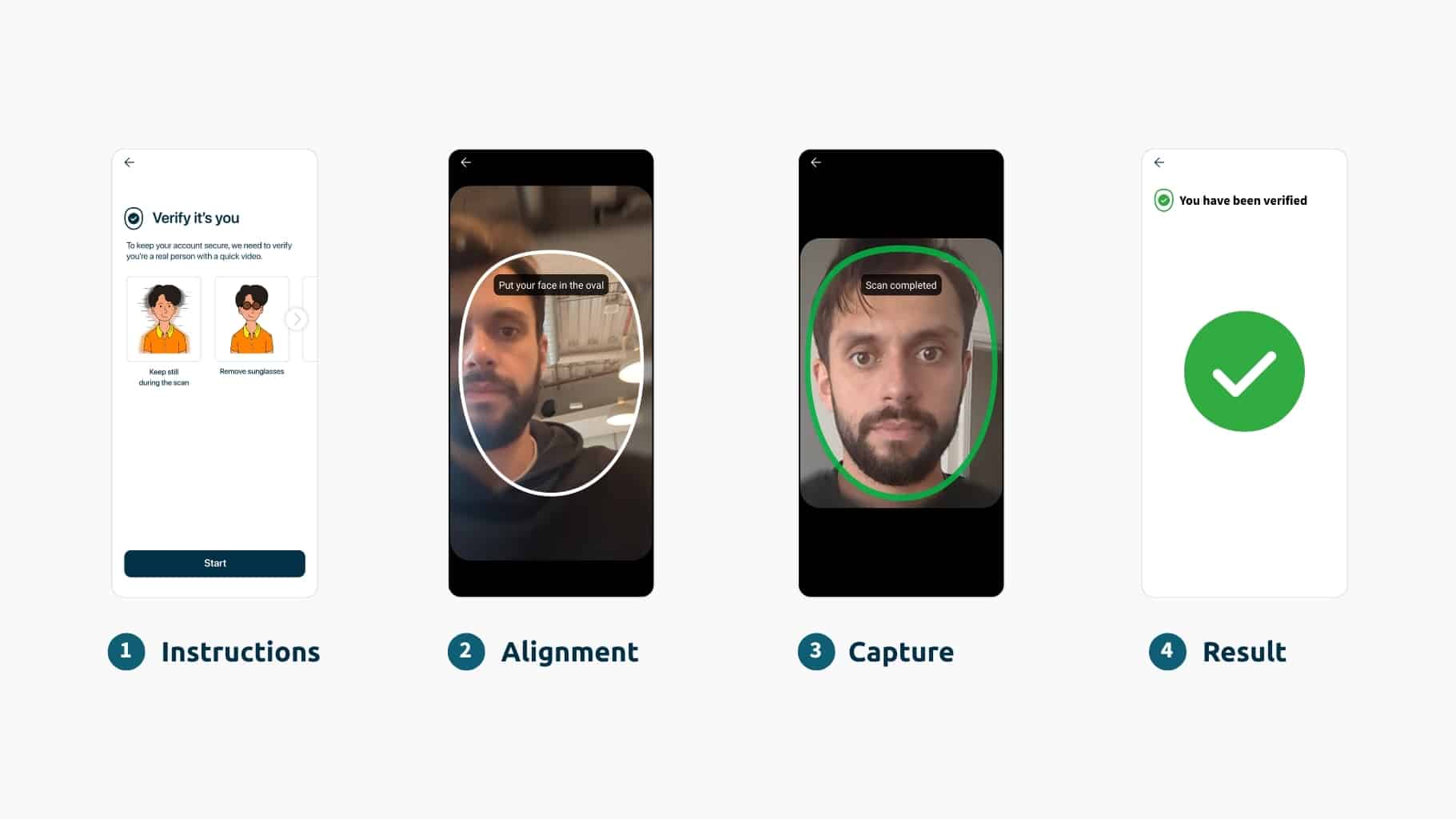

The answer lies in moving beyond what someone knows to proving who someone actually is through genuine human presence. This goes beyond standard liveness solutions and requires science-based biometrics that create an unbreakable link between digital identity and physical reality.

This approach fundamentally differs from traditional methods:

- It verifies genuine presence: critical for real-time remote verification

- It defeats synthetic attacks: even high-quality deepfakes cannot bypass authentication workflows

Not all biometric solutions are built to be AI-resilient. Many are static and struggle to keep pace with increasingly sophisticated deepfakes. An effective defense demands ongoing monitoring, proactive threat hunting, and rapid response capabilities that adapt without disrupting users.

As Gartner recommends, organizations must “invest in a threat intelligence team focused on tracking emerging deepfake-related threats.” Such a defense combines adaptive liveness detection, continuous threat monitoring, and expert human oversight while maintaining user privacy. Ultimately, as Altman emphasizes, “Humans have gotta set the rules.”

The Path Forward

The Fifth Industrial Revolution promises human-AI collaboration, but it is also testing whether humans can maintain agency in an increasingly synthetic world. To build the trust frameworks essential to our AI-powered future, we must evolve our defenses beyond outdated methods.

Science-based biometrics provide this critical foundation by creating an unbreakable link between a digital identity and a genuine human presence. This approach ensures that, no matter how sophisticated the attack, the question “Are you really you?” has a definitive, verifiable answer. This is the only way to restore the trust that is paramount to navigating the next technological revolution.