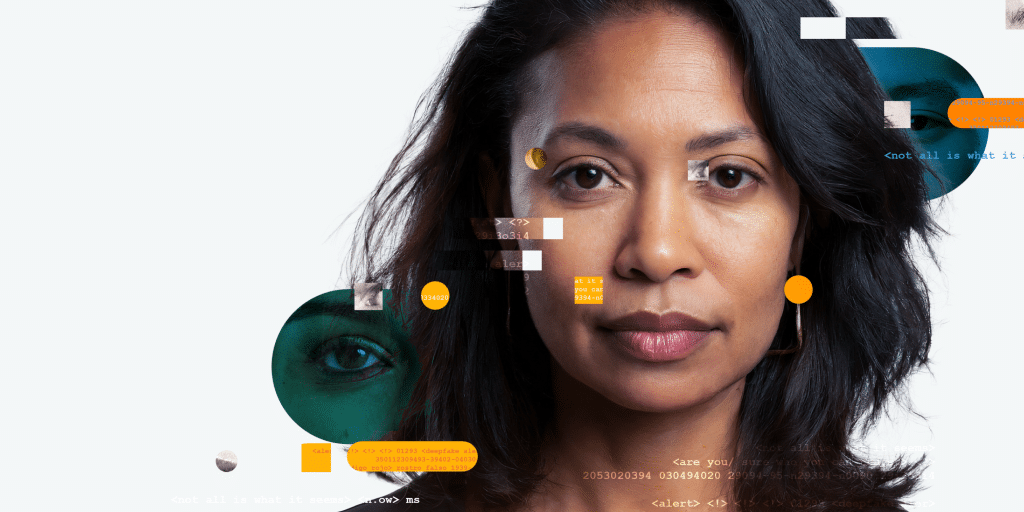

Identity Crisis in the Digital Age: Using Science-Based Biometrics to Combat Malicious Generative AI

Protect Your Organization Against AI-Driven Identity Fraud

Artificial intelligence is transforming how we work and live, from early cancer detection to more efficient government services. But generative AI also introduces serious risks: impersonation, disinformation, and identity fraud are all on the rise.

The stakes are high. Remote identity verification can no longer rely on outdated methods.

Key Insights:

- Biometrics are the most reliable solution: Face verification ensures remote users are who they claim to be and are genuinely present in real time.
- Not all solutions are created equal: On-premises systems and basic fraud detection tools are vulnerable to sophisticated AI attacks.
- Science-based security is essential: Leading solutions combine one-time biometric verification with active threat monitoring and intelligence sharing to stay ahead of emerging threats.

Why it matters for your organization:

- Protect sensitive systems and data from AI-driven identity fraud.
- Ensure trust in remote transactions and interactions.
- Stay ahead of emerging threats with proactive, science-backed verification.

Secure identities. Build trust. Defend against the future of fraud.