January 11, 2024

Remote identity verification is one of the most pressing challenges of our increasingly digital era. The need to confirm a person’s identity without seeing them in person continues to grow in both importance and difficulty.

Among the most insidious threats to remote identity verification are deepfakes created with generative AI. Deepfakes aren’t intrinsically harmful, but they can pose significant security threats. Because it is now impossible to reliably distinguish synthetic imagery from real imagery with the human eye, AI-powered biometrics have emerged as the most robust defense against deepfakes and, therefore, the only reliable method of remote identity verification. Combating deepfakes requires continuous research and mission-critical solutions whose security keeps evolving. People also value the convenience of facial biometric verification: 72% of consumers worldwide prefer face verification for online security.

However, as biometric technology advances, malicious actors seek out new and more sophisticated methods to compromise these systems. It’s important to remember that deepfakes are not a threat unique to biometric security; they threaten any method of verifying a person’s identity remotely.

The Deepfake Threat: A Look Inside The Rise of Deepfake Technology

Deepfakes began as harmless fun, with people creating videos and pictures for entertainment. When combined with malicious intent and cyberattack tools, however, they become sinister threats. Deepfakes have rapidly become a powerful means of launching cyberattacks, spreading fake news, and swaying public opinion. You’ve probably already come across a deepfake without realizing it.

Deepfake technology uses tools such as computer-generated imagery (CGI) and artificial intelligence to alter a person’s appearance and behavior. Machine learning algorithms generate highly realistic synthetic content that imitates human behaviors, including facial expressions and speech. Used maliciously, this technology can be employed, for example, to create counterfeit identities, impersonate real people, and gain access to secure locations.

Because the technology is so sophisticated, deepfake content often appears highly realistic to trained humans and even to some identity verification solutions, making it difficult to distinguish genuine content from synthetic content. And because AI is advancing so rapidly, deepfakes keep evolving too: they are not a static threat.

Deepfake Detection: Evidence Establishes that Humans Cannot Reliably Spot Deepfakes

We’ve compiled a list of studies showing that humans simply cannot be relied on to spot deepfakes:

- A 2021 study titled “Deepfake detection by human crowds, machines, and machine-informed crowds” found that people alone perform much worse than detection algorithms. Detection algorithms even outperformed forensic examiners, who are far more skilled at the task than the average person. The study also found no evidence that education or training improves performance.

- Another 2021 study, “Fooled twice: People cannot detect deepfakes but think they can”, found that people cannot reliably detect manipulated video content and are less capable of identifying deepfakes than they believe; that training and incentives don’t improve their ability; that people are biased toward mistaking deepfakes for authentic videos rather than the reverse; and that people are particularly susceptible to being influenced by deepfake content.

- In 2022, a group of security researchers showed that person-to-person video call verification could easily be overcome with open-source software and watercolor (i.e., digital art manipulation). Human-operated video identification systems can therefore be defeated with basic, everyday approaches and very little skill; sometimes deepfakes aren’t even needed to fool a human.


How Deepfakes Threaten All Online Identity Verification

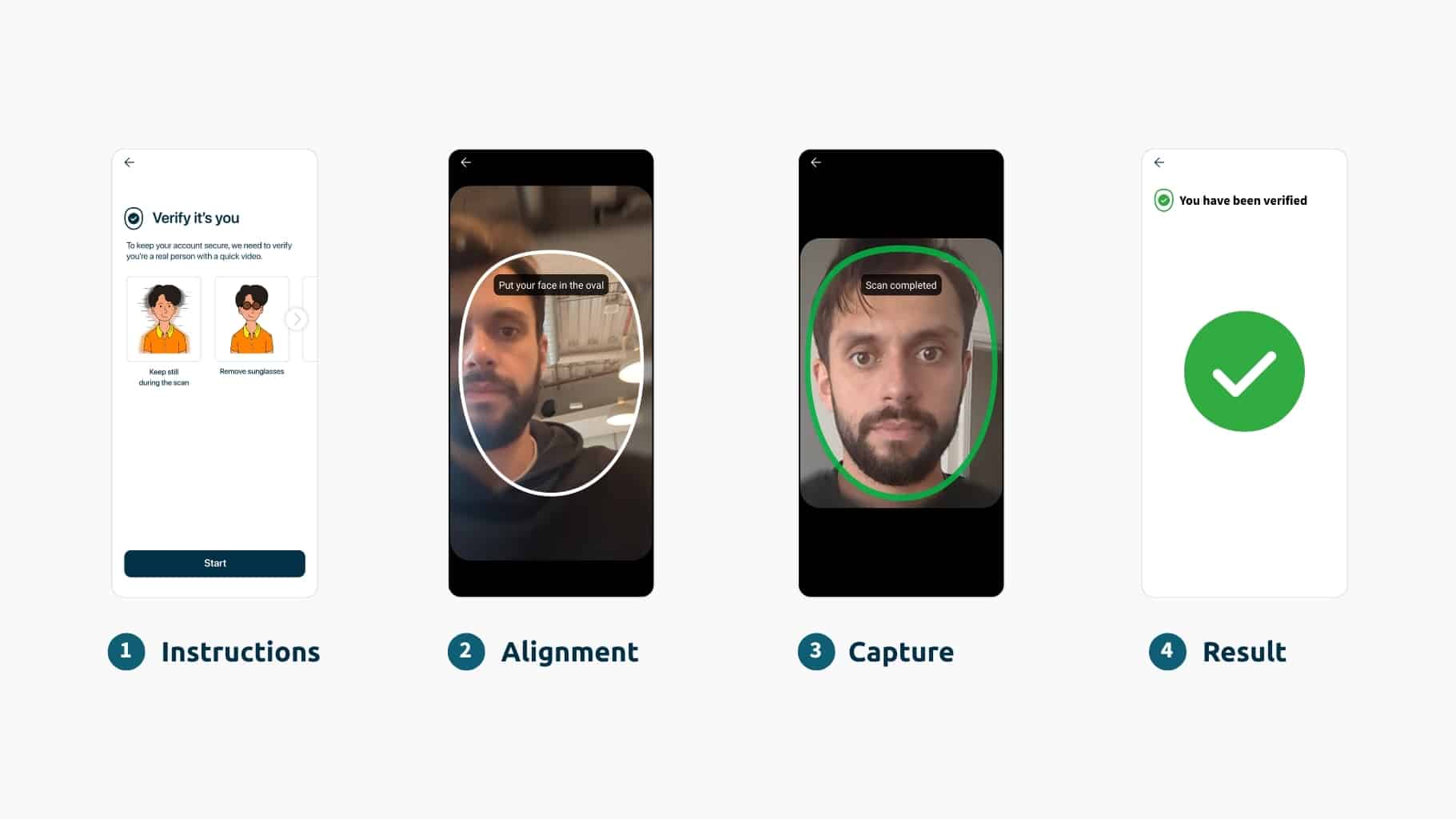

To reliably verify identity remotely, you need to see both the person and their identity document. Video call verification, sometimes known as video identification, is the process of talking to a live person over video calling software to prove who you are. But it is an undesirable option, not least because it is inconvenient, labor- and cost-intensive, and cannot be automated (so it is harder to scale).

Deepfakes can also be injected into a live video stream, which makes them an even more critical threat to video call verification. As we’ve established, humans are less reliable at detecting deepfakes than biometric systems. This is why we caution organizations to remember that deepfakes and other forms of generative AI are not a “biometric problem”; they’re a remote identity verification problem.

Remote identity verification is critical for most organizations that do business online, and in many cases it is non-negotiable. To counter the threat of deepfakes, organizations must put AI to work for cybersecurity, in the form of AI-powered biometrics.

Can’t I Verify Users With Another Biometric Method, To Avoid Deepfakes Altogether?

You might next wonder: “Why aren’t other biometric methods a suitable alternative for identity verification? Can’t you just use iris or voice authentication, so you don’t need to deal with deepfakes at all?”

Biometric face verification specifically has emerged as the only reliable method of remote identity verification because:

- Other biometric methods cannot verify identity; they can only authenticate it. This is because your voice, iris, and other biometrics generally don’t appear on any of your identity documents (unlike your face), so there is nothing trusted to verify the biometric data against: no source of truth. Verification is possible in rare cases, for example when an organization has access to official fingerprint records, but it doesn’t scale the way face verification does. The same goes for traditional methods such as passwords and OTPs, which have entirely failed to keep users secure online. You can’t be 100% certain of someone’s identity just because they know something (a password) or own something (a phone that receives a code).

- AI-driven cloning is a threat to all biometric methods. A voice, for example, is considered the easiest biometric to clone.

Safeguarding Against Deepfake Attacks

When we talk about the threat of deepfakes, our aim is to stress how critical a robust facial verification solution is for defending against them, and to educate organizations about the significant differences between the available solutions.

As the 2023 Gartner Market Guide articulates, “Security and risk management leaders must make deepfake detection a key requirement, and should be suspicious of any vendor that is not proactively discussing its capabilities”.

To mitigate the risks associated with deepfake attacks on biometric systems, several measures can be implemented:

- Multi-Modal Biometrics: Combining multiple biometric methods, such as facial verification and fingerprint scanning, can enhance security by making it harder for attackers to fake multiple modalities simultaneously.

- Liveness Detection: Implementing science-based liveness detection checks can help differentiate between real biometric data and synthetic representations, such as deepfakes, which lack vital signs of life.

- Continuous Monitoring: Biometric systems should incorporate continuous monitoring and anomaly detection to identify unusual patterns or behaviors that may indicate a deepfake attack. Organizations must embrace advanced techniques that adapt to the rapidly accelerating landscape of cyber threats rather than relying on static defenses; the solution is an evolving service, not static software.
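The multi-modal measure above can be illustrated with a small sketch of decision-level fusion using an AND rule, one common way to combine modalities: an attempt is accepted only if every modality passes its own threshold, so an attacker must spoof all of them at once. This is not iProov’s method. The names `ModalityResult`, `fuse_and_rule`, and `failed_modalities`, the score scale, and every threshold and score value are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class ModalityResult:
    """Outcome of a single biometric check (hypothetical structure for illustration)."""

    name: str           # e.g. "face" or "fingerprint"
    match_score: float  # similarity to the enrolled template, assumed to lie in [0, 1]
    threshold: float    # per-modality acceptance threshold (illustrative value)

    def passed(self) -> bool:
        return self.match_score >= self.threshold


def fuse_and_rule(results: Sequence[ModalityResult]) -> bool:
    """Decision-level fusion with an AND rule.

    Accept only if every modality passes its own threshold. An empty input
    is rejected rather than accepted by default.
    """
    if not results:
        return False
    return all(r.passed() for r in results)


def failed_modalities(results: Sequence[ModalityResult]) -> List[str]:
    """Names of the modalities that did not pass, e.g. for logging or manual review."""
    return [r.name for r in results if not r.passed()]


if __name__ == "__main__":
    # Hypothetical scores: a convincing face deepfake, but no matching fingerprint.
    face = ModalityResult("face", match_score=0.93, threshold=0.80)
    fingerprint = ModalityResult("fingerprint", match_score=0.41, threshold=0.75)

    print(fuse_and_rule([face]))                   # True: face alone is accepted
    print(fuse_and_rule([face, fingerprint]))      # False: fingerprint check fails
    print(failed_modalities([face, fingerprint]))  # ['fingerprint']
```

The AND rule trades convenience for security: it lowers the chance of falsely accepting a spoof, but every extra modality is another chance for a genuine user to be falsely rejected.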

Technical biometric solutions such as science-based liveness detection and active threat intelligence will take center stage in identifying synthetic media. However, human research and critical thinking skills remain essential for identifying potential threats. The ultimate solution combines the strengths of humans and automation to create a foolproof defense, as iProov does by pairing our mission-critical biometric verification with our iSOC capabilities.

The question of “how can we be sure of someone’s identity online?” is an extremely important and serious topic, and it’s not going away.

Learn more about how iProov specifically defends against deepfakes in this blog post and read our report, Deepfakes: The New Frontier of Online Crime.