August 26, 2023

You’ve probably seen a deepfake video – even if you didn’t realize it. Computer-generated Tom Cruises have been popping up all over the web during the past few years. Mark Zuckerberg is another common target, with videos circulating of him saying things he didn’t actually say. Then there was Channel 4’s infamous deepfake of the Queen, delivering an alternative Christmas message in the UK.

Deepfakes aren’t a new problem, but the tools needed to create them are becoming more readily available and more advanced. Ultimately, deepfakes are dangerous because they make it difficult for us to trust what we see and hear online. The potential for misuse and the threat to consumers, governments, and enterprises cannot be overstated.

Despite the growing threat of deepfakes to society, many people still don’t know what a deepfake is. To better understand the deepfake landscape, iProov surveyed 2,000 UK and US consumers in 2025, showing them a mix of real and deepfake images and videos. We also previously surveyed 16,000 people across eight countries in 2022 (the U.S., Canada, Mexico, Germany, Italy, Spain, the UK and Australia), asking them several questions about deepfakes.

In this article, we’ll share our new data, compare it with the results of our earlier studies, and discuss solutions to the growing threat.

2025 Deepfake Study Results: Most Consumers Can’t Identify AI-Generated Fakes

- Only 0.1% of participants could correctly identify all deepfake and real content (images and videos) – even when specifically told to look for fakes.

- 22% had never even heard of deepfakes before participating in the study.

- Participants were 36% less likely to spot fake videos compared to fake images

- Over 60% of people were confident in their deepfake detection abilities, regardless of whether they were right or wrong (showing dangerous overconfidence)

- Less than 29% take any action when encountering suspected deepfakes

- 74% worry about the societal impact of deepfakes

- Only 11% critically analyze source and context to determine if something is a deepfake

Read the full study here and take the deepfake quiz here to put your skills to the test.

How Many People Know What a Deepfake Is?

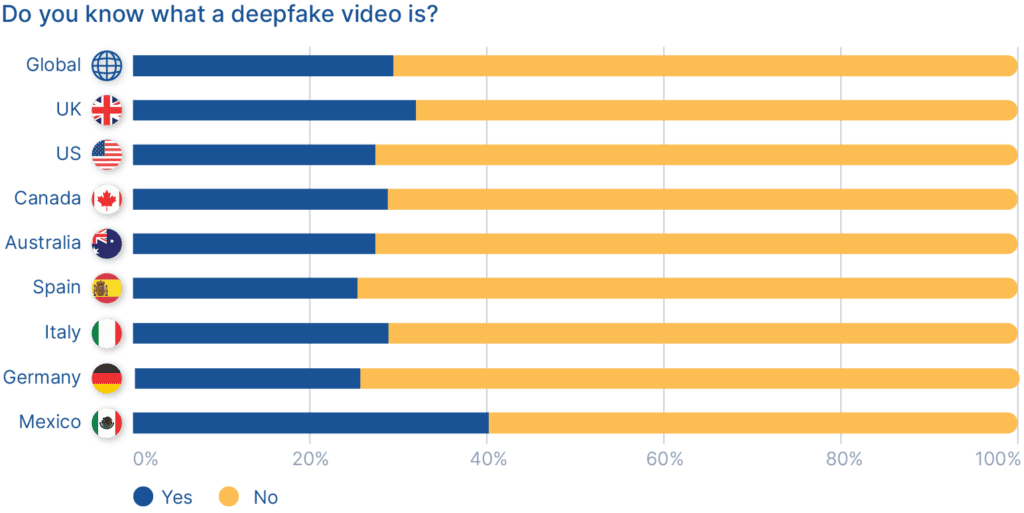

We asked: “Do you know what a deepfake video is?”

- Globally, 71% of respondents say that they do not know what a deepfake is. Just under a third of global consumers say they are aware of deepfakes.

- Mexico and the UK are most familiar with deepfakes: 40% of Mexican respondents and 32% of UK respondents say they know what a deepfake is.

- Spain and Germany feel the least educated about deepfakes: 75% of respondents in both Spain and Germany answered “No”.

Summary:

The percentage of people who know what a deepfake is has more than doubled since our last survey – in 2019, only 13% said they knew what a deepfake was, compared with 29% in 2022. On the one hand, it’s positive that awareness of the deepfake threat is growing. On the other hand, it’s concerning that just 29% of people are aware of deepfakes in 2022. Deepfakes have significant potential for misuse and fraud – and if people don’t know what they are, they are less likely to be prepared to identify when they are being spoofed.

What are deepfakes? Deepfakes are videos or images created using AI-powered deep learning software to show people saying and doing things that they didn’t say or do. Deepfakes are increasingly being used to commit cybercrime – this could be for financial gain, social disruption, voting fraud, or other nefarious purposes. Criminals use deepfakes to commit fraud by pretending to be someone else, or to gain access to services they wouldn’t be able to access using their true identity. Deepfakes can be used in synthetic identity fraud, new account fraud, account takeover fraud, and more. There are many types of deepfakes – face swaps, re-enactments, or fully synthetic imagery produced with techniques such as Generative Adversarial Networks (GANs) – and they can be deployed in several threat types, such as presentation or digital injection attacks.

Ultimately, awareness of deepfakes and an understanding of the solutions available must be expanded and discussed more widely.

How Many People Think They Could Spot a Deepfake?

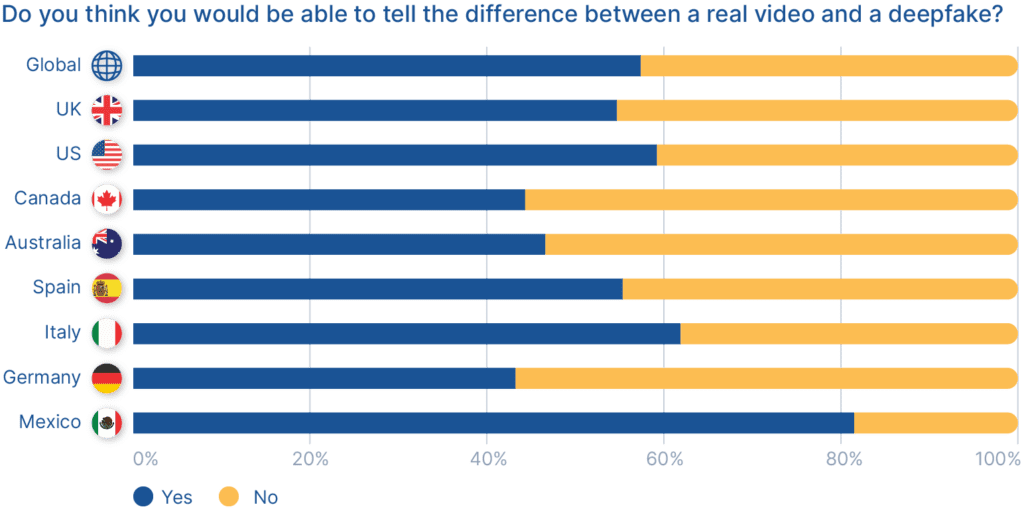

We asked: “Do you think you would be able to tell the difference between a real video and a deepfake?”

- 57% of global respondents say they think they could spot a deepfake. 43% admit they would not be able to tell the difference between a real video and deepfake.

- Respondents from Mexico are most confident – 82% believe they could tell the difference between a deepfake and real video.

- Meanwhile, respondents from Germany are least confident: 57% say that they would not be able to tell the difference.

Summary:

57% of global respondents believe they could tell the difference between a real video and a deepfake, up from 37% in 2019. This is concerning because sophisticated deepfakes can be indistinguishable from genuine footage to the human eye. Detecting a deepfake reliably requires deep learning and computer vision technologies that analyze certain properties, such as how light reflects on real skin versus imagery or synthetic skin.

The real problem right now is high-end deepfakes, such as the infamous Tom Cruise one, which require sophisticated tools, knowledge, and time to create.

If we are over-confident in our ability to spot deepfakes, then we are more at risk of being fooled by one.

What Scares People About Deepfakes?

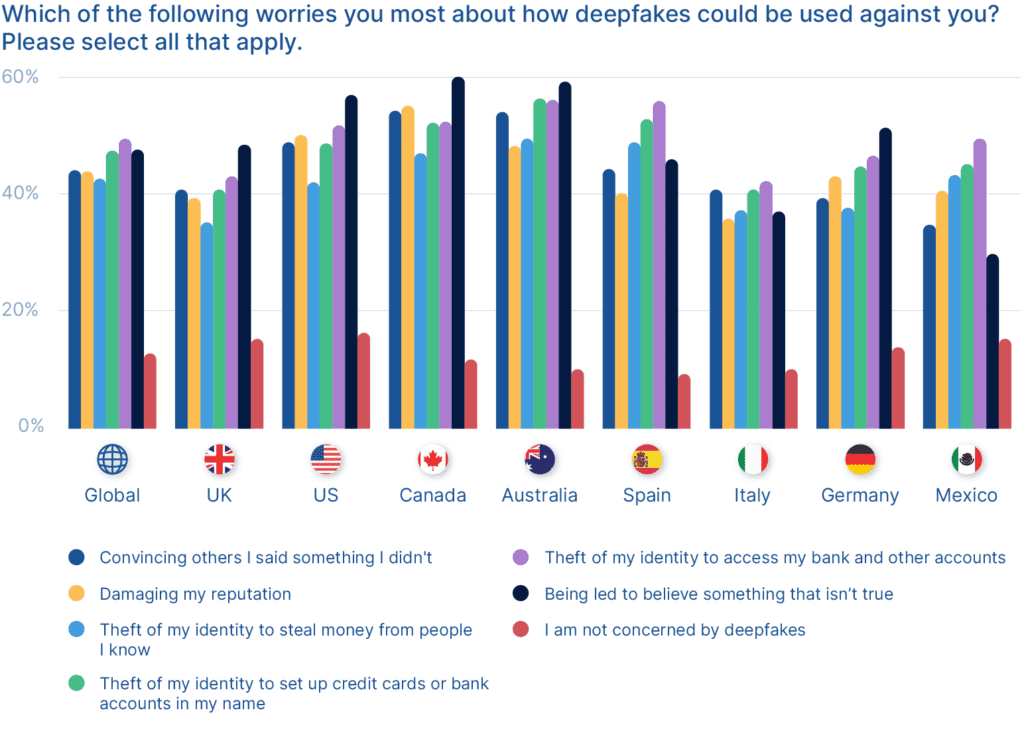

We asked respondents: “Which of the following worries you most about how deepfakes could be used against you? Please select all that apply.”

- Globally, the most common fear surrounding deepfakes is that they could be used for “theft of my identity to access my bank and other accounts”. 50% of respondents selected this option.

- The joint runners-up were “being led to believe something that isn’t true” and “theft of my identity to set up credit cards or bank accounts in my name”, with 48% selecting each of these options.

- Only 13% of global respondents are not concerned by deepfakes.

Summary:

People have wide-ranging fears surrounding deepfakes. The most common themes center on theft and mistrust. And these fears are not misplaced: deepfakes have been used in real life to push political disinformation, harass activists, scam a CEO out of $243,000, and create fake accounts on social media to defraud genuine users.

As consumers, we continue to do more and more activities digitally, which means we need to be able to confirm our identity online. Yet the technology to create deepfakes is continually getting better, cheaper, and more readily available. That’s why deepfake protection will become more and more crucial as the deepfake threat grows, and more people become aware of the dangers.

What Do People Think About Deepfakes?

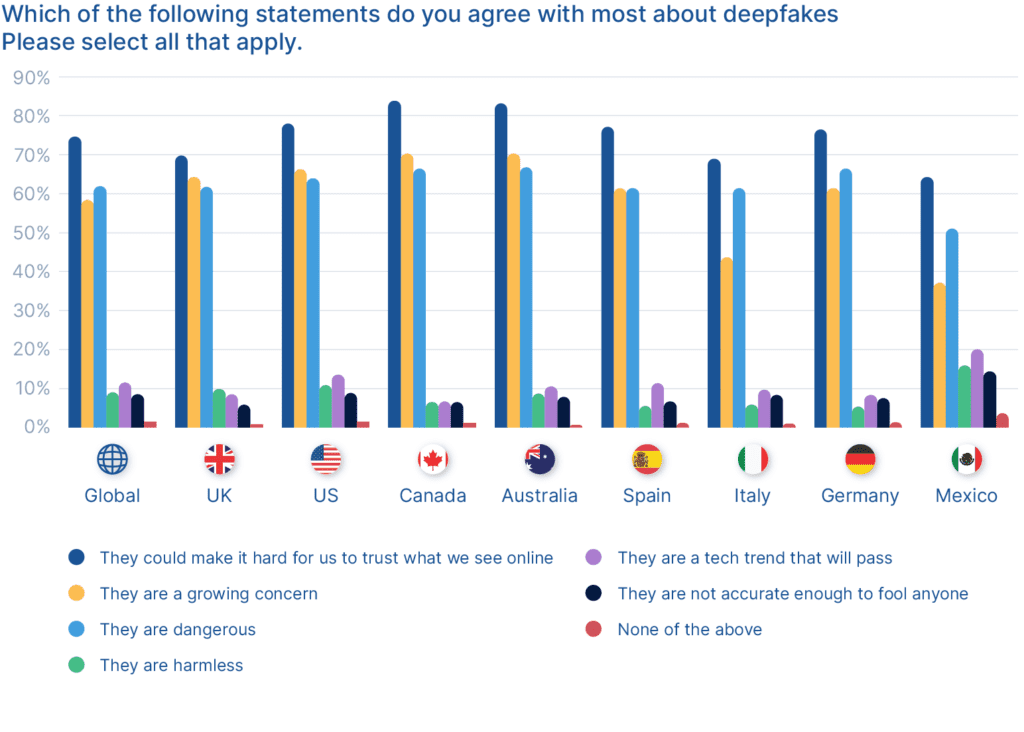

We then asked respondents: “Which of the following statements do you agree with most about deepfakes? Please select all that apply.”

- People are most concerned that deepfakes “could make it hard for us to trust what we see online”.

- The runner-up was that “deepfakes are dangerous” – 62% of global respondents agree.

- 58% also agree that deepfakes are a “growing concern”.

- Only 9% of global respondents believe that deepfakes “are not accurate enough to fool anyone”.

- Only 2% of global respondents are not concerned about any aspect of deepfakes.

Summary:

Overall, this is largely similar to the data that we collected in 2019. In 2019, 58% agreed that deepfakes were a growing concern – the exact same as in 2022. What this shows is that consumers are rightly worried about the erosion of trust online. This is the difficult problem that iProov strives to solve – our patented biometric authentication technology can assure the genuine presence of a real, verifiable individual, confirming that they are who they claim to be and that they are not a deepfake or other presentation/digital injection attack.

Think of the thing that you are least likely to ever say or do. Now imagine your friends, family, or employer being shown a convincing video of you saying or doing it. It is easy to see the potential for malicious misuse. Of course, not all deepfakes are malicious or dangerous. Many have been used for social sharing and entertainment. But they have also been employed in hoaxes, revenge porn, and increasingly, fraud and impersonation.

Recorded Future reported that criminals are willing to pay around 16,000 USD for the creation of a high-end deepfake. These deepfakes can then facilitate advanced social engineering attacks for a significant profit. The problem will continue to worsen as deepfake capabilities become more accessible.

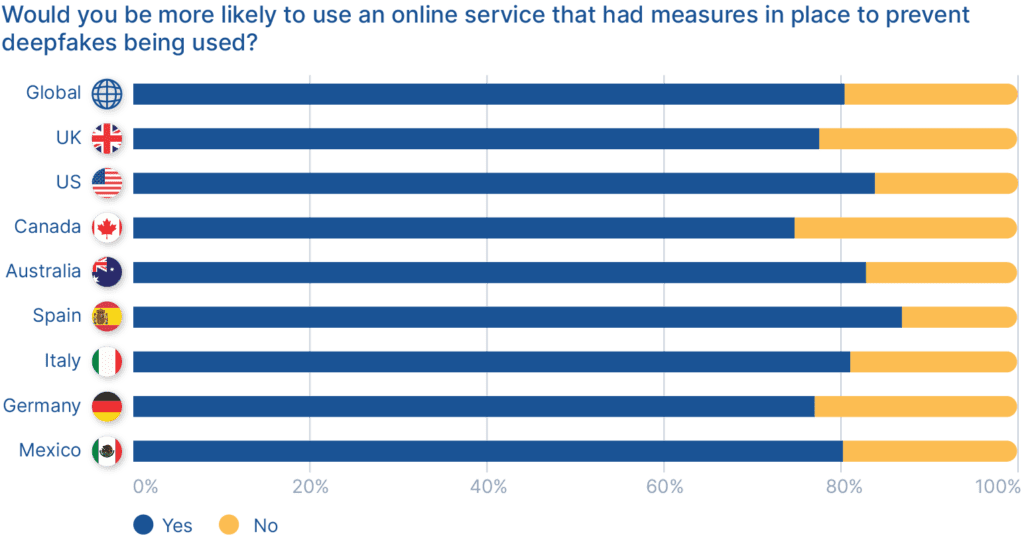

Are People More Likely to Use Services that Defend Against Deepfakes?

We asked survey respondents: “Would you be more likely to use an online service that had measures in place to prevent deepfakes being used?”

- An overwhelming majority stated that they are more likely to use online services that defend against deepfakes – 80% of global respondents agree.

- Respondents from Spain value deepfake protection the most: 87% answered “Yes”. Conversely, Canada is least convinced by deepfake prevention measures but even then, a resounding 75% answered “Yes”.

Summary:

People value deepfake protection slightly more in 2022 than they did back in 2019: in 2019, 75% stated that they would be more likely to use an online service that could prevent deepfakes, versus 80% of global respondents in 2022.
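The 2019 and 2022 figures quoted in this article can be compared with a few lines of arithmetic. The Python sketch below is illustrative only: the function names and the `SURVEY` dictionary are our own labels, and the only inputs are the percentages stated in the summaries above. It reports each change both in percentage points and as a growth factor.

```python
# Percentages quoted in this article: (2019 value, 2022 value).
# The dictionary keys are illustrative labels, not official survey wording.
SURVEY = {
    "knows what a deepfake is": (13, 29),
    "believes they could spot a deepfake": (37, 57),
    "more likely to use services with deepfake protection": (75, 80),
}


def point_change(old_pct: float, new_pct: float) -> float:
    """Absolute change in percentage points."""
    return new_pct - old_pct


def growth_factor(old_pct: float, new_pct: float) -> float:
    """Ratio of new to old; a value above 2.0 means 'more than doubled'."""
    return new_pct / old_pct


for label, (y2019, y2022) in SURVEY.items():
    points = point_change(y2019, y2022)
    factor = growth_factor(y2019, y2022)
    print(f"{label}: {y2019}% -> {y2022}% (+{points} points, x{factor:.2f})")
```

Run as written, this shows that awareness grew by a factor of roughly 2.2 (consistent with "more than doubled"), while preference for protected services rose by 5 percentage points.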

What this demonstrates is that consumers want reassurance that they are being protected against deepfake attacks. By implementing iProov’s Dynamic Liveness technology, governments and enterprises can deliver online verification and authentication that protects against synthetic media such as deepfakes.

How Can Biometric Authentication and Liveness Detection Defend Against Deepfakes?

Biometric authentication is used to prove that a person is who they say they are in an online interaction – such as signing into a bank account or enrolling for a new online service like a government scheme.

Cybercriminals are savvy, and they try an ever-increasing number of methods to circumvent biometric authentication security. They might hold photos or pre-recorded videos up to a device’s camera in a presentation attack, or digitally inject synthetic imagery into the data stream.

According to Europol, researchers expect criminals to increase their use of deepfakes in the coming years, which makes it vital to understand the deepfake threat and prepare for it.

That’s why liveness detection is crucial. Essentially, liveness detection ensures that an online user is a real person. It uses various technologies to differentiate between genuine humans and spoof artifacts. Without liveness detection, a criminal would be able to successfully spoof a system with presented fake photographs, videos, or masks.

Not all liveness detection is created equal. Most liveness detection technology can detect a presentation attack, in which physical artifacts such as masks or recorded sessions are presented to the device’s camera in an attempt to spoof the system. This could also include a deepfake video held in front of a camera.

However, other liveness providers cannot detect a digital injection attack, which bypasses the device (mobile or desktop) camera to inject synthetic imagery into the data stream. iProov’s mission-critical liveness detection technology delivers the highest level of assurance – detecting both presented deepfakes and deepfakes used in digital injection attacks.

iProov’s patented Flashmark™ technology uses controlled illumination to create a one-time biometric that cannot be recreated or reused. This provides stronger anti-spoofing across a range of attacks: not just standard presentation attacks but also highly scalable injection attacks that use deepfakes and sophisticated replays. The result is an industry-leading level of assurance that the person is real and authenticating right now.

You can read more about Dynamic Liveness here and the innovative Flashmark technology powering it here.

Want to Know More About Deepfakes?

- Report: Work From Clone: How Criminals Use Deepfakes to Apply For Remote Jobs

- Report: iProov Threat Intelligence 2024: The Impact of Generative AI on Remote Identity Verification

- Report: Identity Crisis in the Digital Age: Using Science-Based Biometrics to Combat Malicious Generative AI

- Article: How Deepfakes Threaten Remote Identity Verification Systems

Deepfake Statistics: Summary

- iProov’s consumer survey data shows that awareness of deepfakes is growing: in 2019, only 13% of consumers said they knew what a deepfake is, compared with 29% in 2022. By 2025, only 22% had never even heard of deepfakes before participating in our study. However, as deepfakes pose a threat to the online trust landscape and potentially to national security, awareness needs to be higher.

- iProov deepfake statistics also show that 57% of people believe they could spot a deepfake. But unless the deepfake is poorly constructed, this is likely to be untrue. Detecting a deepfake reliably requires deep learning and computer vision technologies that analyze certain properties, such as how light reflects on real skin versus synthetic skin or imagery.

- iProov data also shows that people are more likely to use services that take measures against deepfakes – 80% of global respondents agree. This is a clear wake-up call for providers of online services: to maintain or gain user trust, you must implement technology that can defend against deepfakes.

- Deepfakes are a unique and challenging threat that requires a unique solution. That’s why iProov’s patented biometric technology, Dynamic Liveness, is designed to offer advanced protection against deepfakes and other advanced attacks.

- Only iProov Dynamic Liveness can be trusted to deliver the level of assurance required for defense against deepfakes.

Find out how iProov protects against deepfakes – book your iProov demo or contact us.