November 30, 2023

Deepfakes and other synthetic media – largely created by generative AI technology – are becoming increasingly well-known, and it’s now widely accepted that synthetic media is a problem for individuals, organizations, and society alike.

Deepfakes come in many forms and are produced with different techniques, including face swaps, re-enactments, and Generative Adversarial Networks (GANs). To defend against deepfake attacks, it's essential to understand how each form works.

The issue is particularly pressing because these attacks are becoming more frequent as convincing synthetic imagery gets easier to create. As generative AI continues to advance in sophistication, accessibility, and scalability, it will grow more difficult to trust what we see and who we interact with online.

In this article, we will demystify the often complex world of generative AI-based fraud, and explain the methodologies behind each type.

What Is Generative AI?

Generative Artificial Intelligence (AI) refers to algorithms that can generate new content, including text, images, video, or other media, in response to a given input or prompt. Generative AI often relies on technologies such as neural networks and computer vision. It learns patterns and structure from existing "training data" and uses them to create new content. By contrast, "analytical AI" analyzes existing data and automates the process of spotting patterns or extrapolating trends. This can be useful in fields such as medicine and health data.
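The idea of "learning from training data, then creating new content" can be made concrete with a deliberately tiny sketch. It assumes the "model" is nothing more than a Gaussian fitted to a list of numbers. Real generative models learn far richer structure from images and video, but they follow the same two steps: learn the patterns in the training data, then sample new content from what was learned. The function names `fit` and `generate` are illustrative only.

```python
import random
import statistics


def fit(training_data):
    """'Learn' the structure of the training data: here, only its mean and spread."""
    return statistics.mean(training_data), statistics.pstdev(training_data)


def generate(model, n, seed=None):
    """Create n new values by sampling from the learned distribution."""
    mean, std = model
    rng = random.Random(seed)
    return [rng.gauss(mean, std) for _ in range(n)]


training_data = [1.0, 2.0, 3.0, 4.0, 5.0]
model = fit(training_data)                 # (3.0, ~1.414)
new_samples = generate(model, 3, seed=42)  # three new values, not copies of the inputs
```

An analytical system would stop after `fit` and report what it found. The generative step is `generate`, which uses the learned statistics to produce new data rather than describe the old.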

Generative AI has captured the technological zeitgeist, with equal amounts of awe and controversy surrounding tools such as ChatGPT. These tools can significantly accelerate content creation, but there is real concern about how criminals can weaponize them in the cybersecurity arms race. Manipulative synthetic content can bolster fraud, social engineering, disinformation, and cybercrime.

Additionally, generative AI tools are increasingly available in crime-as-a-service marketplaces. As a result, less tech-savvy attackers now have easy and affordable access to sophisticated tools for creating synthetic media. Software that was cutting-edge not long ago is now commonplace, and the technical barrier to entry is disappearing.

A recent study by the Center for Strategic and International Studies offers a proof point on the quality of these AI-generated spoofs. It indicates that we have "reached the inflection point where humans are unable to meaningfully distinguish between AI-generated versus human-created digital content".

In this article, we're focusing particularly on generative AI as used to create synthetic imagery, including deepfakes.

Understanding Different Types of Deepfake Attacks

Let’s take a moment to understand some of the forms of generative AI attacks.

Face Swaps: A form of synthetic media created from two inputs. Face swaps take an existing video or live stream and superimpose another identity over the original feed in real time. The end result is fake 3D video output merged from more than one face. The biometric template of the genuine individual remains intact, even if visually the resemblance is closer to that of the attacker. A face matcher without adequate defenses in place may identify the output as the genuine individual.
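Code makes it easier to see why a face matcher on its own can be fooled. The sketch below is a minimal, hypothetical threshold-based matcher. It assumes faces have already been converted into embedding vectors by some face recognition model, which is not shown. The vectors and the 0.8 threshold are made-up illustrative values, not measurements from any real system.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def is_match(enrolled, probe, threshold=0.8):
    """Accept the probe if its embedding is close enough to the enrolled template.

    Nothing here asks whether the probe came from a live person: a face-swapped
    frame whose embedding lands near the template is accepted like a genuine one.
    """
    return cosine_similarity(enrolled, probe) >= threshold


# Hypothetical embeddings, for illustration only.
enrolled_template = [0.9, 0.1, 0.4]
face_swap_probe = [0.85, 0.15, 0.42]  # carries the genuine person's biometric features
unrelated_face = [0.1, 0.9, -0.3]

print(is_match(enrolled_template, face_swap_probe))  # True
print(is_match(enrolled_template, unrelated_face))   # False
```

The matcher only answers "does this resemble the enrolled person?" It does not check whether a real, live person is in front of the camera. That separate question is what additional defenses need to address.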

Re-enactments: Also known as "puppet-master" deepfakes. In this technique, the person in the source video controls the facial expressions and movements of the person in the target video or image. A performer sitting in front of a camera guides the motion and deformation of a face appearing in a video or image. Face swaps replace the source identity with the target identity (identity manipulation). Re-enactments, by contrast, manipulate the facial expressions of one input at a time.
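The "puppet-master" idea can be sketched with facial landmarks. The sketch assumes each face is reduced to a list of 2D landmark points listed in corresponding order, such as the two mouth corners. It is a toy illustration of motion transfer, not how any particular re-enactment tool works. Real systems must also handle head pose, differing face proportions, and rendering the final image. The function name `reenact` and all coordinates are hypothetical.

```python
Point = tuple[float, float]


def reenact(
    source_neutral: list[Point],
    source_frame: list[Point],
    target_neutral: list[Point],
) -> list[Point]:
    """Shift each target landmark by how far the matching source landmark moved."""
    if not (len(source_neutral) == len(source_frame) == len(target_neutral)):
        raise ValueError("landmark lists must have the same length")
    return [
        (tx + (fx - nx), ty + (fy - ny))
        for (nx, ny), (fx, fy), (tx, ty) in zip(source_neutral, source_frame, target_neutral)
    ]


# Hypothetical landmarks: left and right mouth corners, in pixel coordinates
# (image y grows downward, so a smaller y means "higher up").
performer_neutral = [(30.0, 60.0), (70.0, 60.0)]
performer_smiling = [(28.0, 57.0), (72.0, 57.0)]  # corners pulled wider and up
target_neutral = [(35.0, 65.0), (65.0, 65.0)]

target_smiling = reenact(performer_neutral, performer_smiling, target_neutral)
print(target_smiling)  # [(33.0, 62.0), (67.0, 62.0)]
```

The target's landmarks start from the target's own positions and only the performer's movement is added. This reflects the distinction drawn above: the expression is manipulated, but the identity is not swapped.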

Generative Adversarial Networks (GANs): A GAN pits two AI models against each other to make a deepfake output as "accurate", or authentic-looking, as possible. The two models, one generative and one discriminative, are trained in tandem. The generative model creates content that mimics the examples in its training data. Meanwhile, the discriminative model tests the generative model's results. It estimates the probability that a given sample came from the training data rather than from the generative model. Both models keep improving until the generated content is just as likely to come from the generative model as from the training data, and the discriminator can no longer tell them apart. This method is so effective because the generator is constantly tested against a model trained specifically to catch its fakes, and it improves its output in response.
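The competition described above is usually written as a minimax objective, following the original formulation by Goodfellow et al. (2014). Here $G$ is the generative model and $D$ the discriminative model, which outputs the probability that its input is real. $p_{\text{data}}$ is the training data distribution, $p_z$ is the random noise fed to the generator, and $p_g$ is the distribution of generated samples:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

For a fixed generator, the best possible discriminator is

$$
D^*_G(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}
$$

At the point described above, generated content is just as likely to come from the generator as from the training data, so $p_g = p_{\text{data}}$. In that case $D^*_G(x) = \tfrac{1}{2}$ for every $x$, and the discriminator can do no better than a coin flip. The objective then reaches its global optimum, $V(D^*_G, G) = -\log 4$. This describes the idealized equilibrium; in practice, GAN training is often unstable and may not reach it exactly.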

How Are Generative AI Attacks Typically Used by Fraudsters?

Creating and using synthetic imagery made with generative AI is not inherently criminal. Unfortunately, however, synthetic imagery is a gift for cybercriminals. It aids crimes such as extortion and harassment, supports the deliberate spread of political disinformation, and facilitates identity and document fraud. One example is attempting to bypass the identity verification checks mandated by Know Your Customer regulations.

Common forms of fraud supported by synthetic imagery include:

What’s the Difference Between Digitally Altered and Digitally Generated Synthetic Media?

Generative AI produces synthetic media, but not all synthetic media is created by generative AI.

Generative AI generates entirely new data that is unique and original, as opposed to simply processing, analyzing and modifying existing data. This distinction could be summarized as digitally generated versus digitally altered imagery.

Why Is Everyone Talking About Generative AI?

While AI can deliver an array of positive use cases – including task automation, creative inspiration, and analysis of complex data sets – the dangers of generative AI are currently taking center stage.

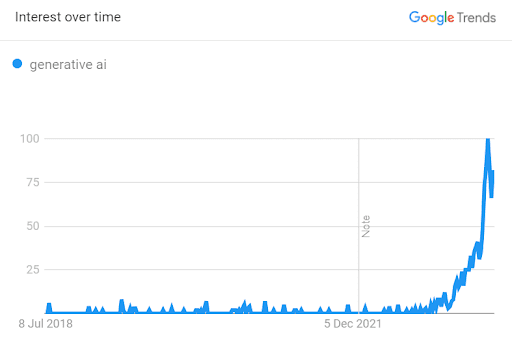

Note: Google Trends represents relative search interest in a specific term over time rather than raw search counts. The data is normalized to a range of 0 to 100, where 100 marks the term's peak popularity in the period shown, and is referred to as "interest over time".
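The 0 to 100 scale can be illustrated with a minimal sketch. Each point is divided by the series' peak and multiplied by 100, so the highest point becomes 100 and every other value is expressed relative to it. The figures below are hypothetical. The sketch also leaves out Google's own sampling and its normalization against total search volume, and `scale_to_peak` is an illustrative name rather than part of any Google API.

```python
def scale_to_peak(values):
    """Rescale a series so its highest point is 100, rounding to whole numbers."""
    peak = max(values)
    if peak == 0:
        return [0 for _ in values]  # no interest at all: avoid dividing by zero
    return [round(v / peak * 100) for v in values]


# Hypothetical weekly search-interest figures for one term.
weekly_interest = [30, 60, 120, 90]
print(scale_to_peak(weekly_interest))  # [25, 50, 100, 75]
```

Because every point is relative to the peak, a value of 50 means "half as popular as the busiest period", not any particular number of searches.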


One thing is for certain: generative AI is advancing rapidly, and it promises deeply disruptive and transformational impacts across a variety of industries.

Generative AI has captured the interest of the public, policymakers, and governments alike. You can learn more about generative AI and the public sector in this article – which provides a list of responses from governments and policymakers across the globe, and details how iProov is safeguarding against the threat.

In today’s digital-first world, there is a larger digital attack surface with a greater number and variety of high-risk transactions taking place online – often meaning bigger rewards for fraudsters. Ultimately, the concern is how bad actors can utilize AI-generated synthetic media for fraudulent purposes and to facilitate the spread of false information online.

Closing Thoughts on Generative AI and Biometric Face Verification

A genuine human face is unique and cannot be truly replicated. This is why biometric face verification has emerged as the most secure and convenient method of verifying user identity online.

One thing is clear: biometric technology will serve as a lifeline for verifying genuine presence remotely, particularly as replicas can no longer be distinguished by the human eye. In truth, only the most advanced systems, those that have been fighting this rapidly scaling arms race, are equipped to recognize media created by generative AI.

How iProov Can Help

To detect deepfakes, iProov combines patented one-time biometric technology with deep learning and computer vision. Together, these analyze certain properties that media created by generative AI cannot recreate, because there is no real person on the other side of the camera. This is why incorporating a real-time biometric into liveness technology is critical for organizations to distinguish between synthetic media and genuine people.

To learn more about how fraudsters are harnessing generative AI to undermine identity verification and bolster synthetic identity fraud, read our new report “Stolen to Synthetic” here.

Book a demo or request a custom consultation with an iProov expert here today.